How Well Can Computers Hear Us?

When we talk about speech-to-text accuracy, we are asking a simple question: How well does a computer understand the words I say? Think of it like a game of Simon Says. If the computer does exactly what you say, it gets a high score. If it messes up, it gets a low score.

Why Getting Words Right Is a Big Deal

Have you ever told a grown-up what you want for your birthday? Imagine you say, “I want a big, red fire truck.” If they hear you right, you get the toy you wanted. Awesome!

But what if they hear “I want a pig, fed wire luck”? That’s silly, and you don’t get your fire truck. This mix-up shows why it’s so important for computers to hear our words correctly. When the words are wrong, things can get funny, but also very messy.

How Good Hearing Helps Us

When a computer has a great “ear,” it can help us a lot. It’s not just for toys.

Here are some real examples:

- Sending a text with full hands: Imagine you are holding a yummy ice cream cone and need to text your friend. You can just say the words to your phone, and it types them for you. If it hears you right, your friend will know you’re on your way.

- Helping doctors: A doctor can talk to a computer about a sick person, and the computer writes it all down. This lets the doctor spend more time helping people and less time typing. One wrong word here could be a big problem.

- Talking to your car: New cars let you say things like “Call Mom” or “Find the closest pizza place.” The car has to get it right every time so you can keep your hands on the wheel and your eyes on the road.

The best speech-to-text tools can handle some noise, and in good conditions some can get over 99 words right out of 100. That’s like getting a 99 on a spelling test! For people whose jobs need perfect words, this is super important.

The Problem With Bad Hearing

When a computer can’t hear well, it can make big mistakes. It’s like a smart speaker that plays the wrong song. Or a map that sends you to the wrong street. It’s a big waste of time.

Every time words get mixed up, it’s like a game of telephone where the last person hears something completely different. For important jobs like helping people who are sick, these mix-ups can change the meaning of everything. That’s why we need computers to be the best listeners they can be.

If you want to learn more ways to use your voice with a computer, check out our guide on how to use voice-to-text.

How We Grade a Computer’s Listening

So, how do we know if a computer is a good listener? We give it a grade, just like a teacher gives you a grade on a test. The name for this grade is the Word Error Rate, or WER.

Think of WER as a “mistake score.” It tells us how many mistakes the computer made when it turned your spoken words into typed words.

Imagine you say 100 words. If the computer gets only one word wrong, its mistake score, or WER, is 1%. That means its speech-to-text accuracy is 99%. That’s an A+! We always want the mistake score to be very low. A low score means the computer is a great listener.

The Three Kinds of Mistakes

To really understand the grade, we need to know the three kinds of mistakes a computer can make.

- Swapping a word (Substitutions): This is when the computer hears one word but types a different one, often one that sounds similar. You say, “I see a bee,” and it types, “I see a pea.”

- Adding a word (Insertions): Sometimes, the computer adds a word you did not say. You say, “I like cake,” but it types, “I like the cake.” It added the word “the.”

- Forgetting a word (Deletions): This is when the computer misses a word completely. You say, “I want a big red ball,” and it just writes, “I want a red ball.” The word “big” is gone.

To get the mistake score (WER), you add up all the mistakes (swaps + adds + forgets). Then you divide that number by how many words you said. The smaller the answer, the better the computer listened.
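If you like to tinker, you can count the mistake score with a short program. The Python sketch below shows one common way to do it. It is not the method of any particular tool. It lines up the two sentences word by word and finds the smallest number of swaps, adds, and forgets that turn what you said into what the computer typed. Grown-ups call this word-level edit distance, or Levenshtein distance. The function name `word_error_rate` is made up for this example. The sketch also assumes that capital letters and punctuation should be ignored.

```python
import string

# Removes punctuation so "jumps." and "jumps" count as the same word.
_STRIP_PUNCTUATION = str.maketrans("", "", string.punctuation)


def word_error_rate(said: str, typed: str) -> float:
    """Mistake score: (swaps + adds + forgets) / number of words said."""
    ref = said.lower().translate(_STRIP_PUNCTUATION).split()
    hyp = typed.lower().translate(_STRIP_PUNCTUATION).split()
    if not ref:
        raise ValueError("'said' must contain at least one word")

    # dist[i][j] = fewest mistakes needed to turn the first i words said
    # into the first j words typed.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # the computer forgot all i words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # the computer added all j words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            swap_cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j - 1] + swap_cost,  # same word, or a swap
                dist[i - 1][j] + 1,              # a forgotten word
                dist[i][j - 1] + 1,              # an added word
            )

    return dist[len(ref)][len(hyp)] / len(ref)


# The three kinds of mistakes from the list above:
print(word_error_rate("I see a bee", "I see a pea"))                            # 1 swap / 4 words -> 0.25
print(round(word_error_rate("I like cake", "I like the cake"), 3))              # 1 add / 3 words -> 0.333
print(round(word_error_rate("I want a big red ball", "I want a red ball"), 3))  # 1 forget / 6 words -> 0.167
```

The answer is a fraction, so 0.25 means a 25% mistake score. Added words count as mistakes too, so a very bad transcript can even score above 1 (over 100%). That is why "accuracy = 100% minus WER" is only a handy shortcut when mistakes are few.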

Seeing The Grade in Action

Let’s try a small test. You say this sentence to your computer:

“The quick brown fox jumps.” (That’s 5 words.)

The computer tries to listen and types this:

“The quick browns fox over.”

Let’s grade its work. It made two mistakes:

- “browns” is a swap for “brown.”

- “over” is a swap for “jumps.”

The computer made 2 mistakes in a short 5-word sentence. You can see how these little mistakes can add up in a long story, changing what it means.
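We can write this test's grade with the mistake-score rule from earlier. Here S counts swaps, D counts forgotten words (deletions), I counts added words (insertions), and N is the number of words you said:

$$
\text{WER} = \frac{S + D + I}{N} = \frac{2 + 0 + 0}{5} = 0.4 = 40\%
$$

A 40% mistake score means the computer got only about 60% of this sentence right. That is a long way from the A+ of a 99% tool.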

This is where things in the real world matter.

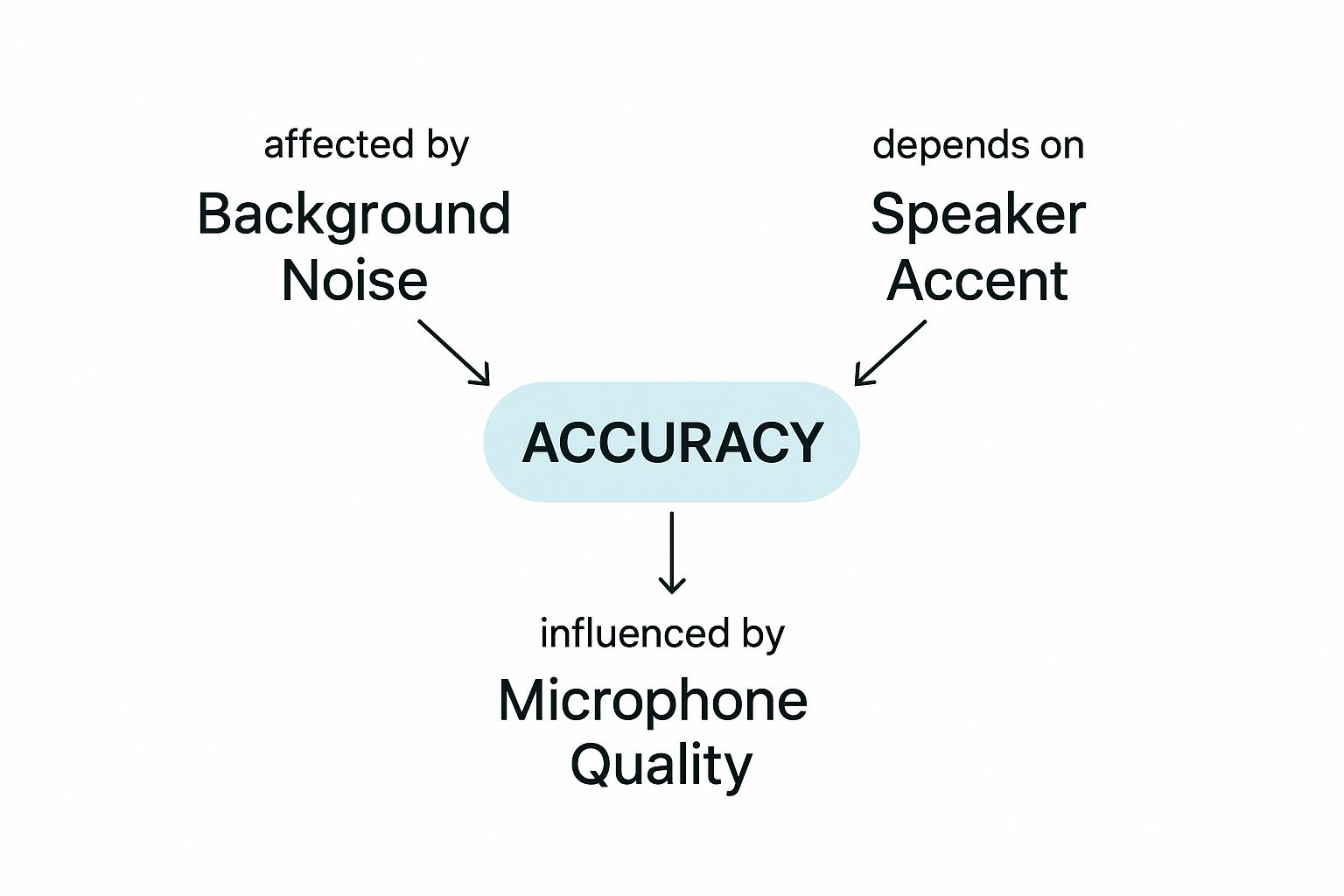

As the picture shows, things like noise, a bad microphone, or even talking with a different accent can change how well the computer hears. A quiet room and a good microphone are your best friends for getting the words right.

When a company says its tool is 99% accurate, it means the tool has a very low mistake score: just one mistake for every 100 words. This is what you need for important work. For Apple users, finding a good tool is important. We made a guide on the best speech-to-text solutions for Mac OS.

Understanding the mistake score helps you pick the right tool and use it in the best way.

How We Taught Computers to Listen

A long time ago, computers were very bad at listening. Think of them like a baby that can only understand a few sounds from one person who talks very slowly. It was nothing like the smart helpers we have today, but it was a start.

Improving speech-to-text accuracy was slow work. At first, scientists were happy when a computer could tell the difference between “one” and “two” in a perfectly quiet room. Every new word the computer learned was a big deal.

From a Few Words to a Smart System

The computer slowly grew up. A big step happened in the 1970s. A computer named Harpy was like a toddler. It could understand about 1,000 words—the same as a three-year-old child. This was great because Harpy could guess what word might come next, which helped it understand better. You can read more about the early history of speech recognition to see how it all started.

But even then, computers were still not great listeners. By the 2000s, the best computers were only right about 80% of the time in a quiet lab. If there was noise or someone talked a little differently, that number went way down. The computers were missing something important to really understand us.

It turned out the answer wasn’t just a stronger computer. The computers needed to listen to lots and lots of real people talking.

The Internet Changed Everything

The big change came when companies like Google had an idea. They had a secret tool: the internet. Google started to let its computers learn from all the words people typed into Google search—more than 230 billion words. Instead of being taught a small list of words, the computers could now learn from how real people talk and ask questions.

This was like sending the computer to the biggest school in the world. By seeing how real people write, the computer started to learn patterns, funny words, and how we put sentences together. It began to understand that words are not just sounds, but tools we use to talk to each other.

All this new information, plus super-powerful computers, was the magic trick. It’s what made speech-to-text accuracy improve so much. The talking helpers in our phones and homes all got smart because computers finally had enough examples to become great listeners.

What Helps or Hurts a Computer’s Hearing

Have you ever wondered why your smart speaker understands you one minute, but gets your favorite song wrong the next? A computer’s “hearing” can be tricky. Many things can help it hear better or make it harder for it to understand you.

Think of it like talking on a phone with bad service. If the sound is clear and the room is quiet, you hear everything. But if a dog is barking, you will miss some words. Speech-to-text tools have the same problem.

Problems like these can hurt speech-to-text accuracy. The clearer your voice is for the computer, the better it can do its job.

Things That Make It Hard For Computers To Listen

A computer doesn’t hear like a person. It looks for patterns in sound waves that match words it knows. When other sounds get in the way, the patterns get messy, and the computer gets confused.

Here are some common problems:

- Loud Noises: A busy room, a fan, or a TV can make it hard for the computer to hear your voice clearly.

- Talking Too Fast: If you talk too quickly, your words can mush together. The computer won’t know where one word ends and the next one starts.

- More Than One Person Talking: If many people talk at once, the computer can’t tell who is saying what. It often mixes all the words into silly sentences.

In one comparison, one speech-to-text tool made 73% fewer mistakes caused by loud noise than another tool. This shows that how a tool is built really matters for using it in the real world.

Even the way you talk can be a problem. If you have a strong accent, mumble your words, or talk very softly, it can be hard for the computer to match the sounds to the right words.

Your Microphone is the Computer’s Ear

What you use to talk to the computer is very important. Your microphone is the computer’s ear. A bad ear means bad hearing.

The little microphone built into a laptop is usually not very good. It picks up sounds from your keyboard and other noises in the room. A good microphone that you plug in is much better. It is made to focus on your voice and block out other sounds.

This is why people who talk to computers for their jobs—like doctors or writers—often use a good headset. It keeps the microphone close to their mouth and helps them get a clear sound.

Making the Computer Smarter with Your Words

What happens if you use a word the computer has never heard before? This is a problem for people who use special words in their jobs, like doctors or scientists. A doctor might say the name of a medicine, and the computer types a word that sounds the same but is wrong.

To fix this, cool tools like WriteVoice let you teach the computer new words. You can make a list of special words, names, or company names. This helps the computer learn your words, which makes speech-to-text accuracy much better for you.

To make it easy, here is a quick table of what helps and what hurts.

Simple Ways to Get Better Hearing from Your Computer

| What Helps (Do This!) | What Hurts (Don’t Do This!) |
|---|---|
| Talk in a quiet room. | Have the TV or music on. |
| Use a good microphone close to your mouth. | Use the microphone built into your laptop. |
| Speak clearly and not too fast or too slow. | Mumble or talk really fast. |
| Teach the computer your special words. | Use words it has never heard before. |
| Make sure only one person talks at a time. | Have many people talk at once. |

If you remember these simple rules, you can make your computer a much better listener. You’ll spend less time fixing mistakes and more time getting your ideas down.

The Time of Smart Voice Helpers

Remember the first time you talked to a phone and it actually understood you? Maybe it was Siri, Alexa, or the Google Assistant. That amazing moment started a new time for voice tools.

All the big companies started a race to build the smartest computer that could listen. This race was great for us because it made speech-to-text accuracy improve very, very fast. We were watching computers learn to understand us almost as well as another person can.

A Race to Be the Best

What was the secret that made this happen? It was a very smart kind of computer brain called deep learning. Instead of just following rules, these computers could learn from listening to millions of hours of real people talking. This taught them to understand different ways of speaking and even ignore loud noises.

This race made things get better super fast. In just a few years, voice helpers went from being a fun toy to being in everyone’s house. The companies were all trying to get the lowest mistake score. For example, one company had a mistake score of 6.9% in 2016. The next year, another company beat them with a score of 5.9%. You can learn more about this cool story in the history of speech-to-text technology.

Because of this race, the tools we use every day got smarter and much easier to use.

Before this time, talking to a computer was hard. Afterward, it became a normal and helpful part of our day.

How Good Hearing Made Voice Helpers Useful

Getting the words right wasn’t just for fun. It made voice commands really work. For the first time, you could do helpful things without using your hands.

This much better speech-to-text accuracy let us do things like:

- Control Things with Your Voice: You could finally ask your speaker to play a certain song, set a kitchen timer while cooking, or get the weather without having to ask three times.

- Make Homes Smarter: Voice helpers became the boss of the smart home. They could understand what you meant when you said to turn down the lights or lock the doors.

- Get Help While Driving: Asking for directions in the car became safe and easy. The computer could finally understand street names and places correctly.

By the end of this race, the best computers could get over 94 words out of 100 right in a quiet room. That amazing improvement is why talking to our phones and speakers feels so normal today.

Why Human Ears Are Still the Best


Even with all the smart computers, the best listening tools in the world are the two ears on your head. A computer can know millions of words, but it doesn’t really understand them like a person does.

Think of it like this: a computer is like a kid who has memorized a whole dictionary. They know what every word means, but they won’t get a joke or understand if you are feeling sad just by the sound of your voice. A person hears the whole story, not just the words.

Our brains are just amazing at figuring things out.

The Big Difference Is Understanding

A computer’s job is to match sounds to words it knows. But a human brain does a lot more.

We don’t just hear words; we understand what’s going on around them. If your friend drops their ice cream and says, “Oh, that’s just great,” you know they are not happy at all. A computer would just write down the words and miss the real feeling.

This is why people are still the best at turning speech into text. We understand the small clues that change everything.

Here is what our brains do that is still hard for computers:

- Feeling emotions: We can tell if someone is happy or mad just by how their voice sounds.

- Getting jokes: We know when someone is being funny or saying the opposite of what they mean.

- Ignoring noise: In a noisy lunchroom, we can listen to just one friend and block out all the other sounds.

A computer hears words. A person understands the message. This is why for very important jobs, we still need a person to listen.

Why Perfect Words Are So Important

In some jobs, a small mistake is a very big deal. Think about a courtroom or a doctor’s office, where every single word matters a lot.

Imagine someone in court says, “I can’t be sure,” but the computer types, “I can be sure.” That one little word changes the whole meaning and could get someone in big trouble. Or, if a doctor says a sick person needs 50 drops of medicine and the computer writes 15, that could be very dangerous.

This is why for really important things, people and computers work together. A computer quickly types out the words, and then a person listens and fixes the mistakes. This way, you get the speed of a computer and the amazing hearing of a human.

How Good Are Computers in the Real World?

Computers are getting better, but they are still not as good as a person, especially when the sound is not perfect. If there is noise, people talking differently, or special words, the computer can make a lot of mistakes.

Studies show that with messy sounds, computers might only be right about 62% of the time. A person who is paid to write down words is right almost 99% of the time. In a 1,000-word recording, that’s roughly 380 wrong words from the computer versus about 10 from the person. That big difference means a person often has to fix a lot of a computer’s work. You can discover more about these AI vs human transcription statistics to see how they compare.

So, while computers are amazing, a person’s touch is still needed when the words have to be perfect.

Common Questions About Speech-to-Text Accuracy

We have learned a lot about how computers listen. You might still have some questions about how it all works. Let’s answer a few common ones.

One big question is about keeping things private. When I talk, who is listening? Good tools are made to keep your words safe, and some don’t save what you say at all. Your private talks should stay private.

What about different ways of talking? Will the computer understand me? Yes! Today’s computers have learned from people all over the world. They are much better at understanding how different people talk. A very strong accent might still be tricky, but they are getting better all the time.

What About Loud Noises?

Your house is not a quiet studio. What happens if the dog is barking or you’re in a busy place? Can the computer still hear you?

This is where the really good tools shine. Some of the best computers make 73% fewer mistakes from loud noises than older ones. They are getting very good at finding your voice in a noisy room. But for the very best results, a quiet spot is always a good idea.

The most important thing for good speech-to-text accuracy is clear sound. A clear voice with a good microphone will always work better than mumbling in a loud room.

Is Talking Faster Than Typing?

People wonder if talking to a computer is really faster than typing. For most of us, the answer is yes! Most people can talk much faster than they can type. A good tool can keep up with you, turning your words into text right away.

Here are a few last tips to help your computer listen better:

- Practice helps: Some tools learn your voice the more you use them. So, the more you talk, the smarter it gets.

- Teach it your words: If you use special words for work or hobbies, find a tool that lets you add them to a list. This is a huge help.

- Get a good microphone: A good microphone is your best friend for getting clear sound. You don’t need a fancy one, but it will be much better than the one in your laptop.

If you need a tool for important work, especially on an Apple computer, our guide on Mac speech-to-text for professionals has more great tips. With a little setup, you can make your computer a super listener.